
Two examples of AI agent management working

AI agent management improves resolution rate and CSAT for AI agents, reducing the workload for a human support team while freeing up time for the support team manager.

Properly managed AI agents also improve the performance of human agents by collecting new context on complex issues, making it easier for a human support agent to resolve level 2 and level 3 messages effectively for the customer.

Here are two specific examples of an AI agent management process improving overall team efficiency and effectiveness.

Example #1 - Premium outerwear brand maintains CSAT while increasing AI share of resolutions from 37% to 66%

This outerwear brand increased AI agent share of resolutions from 37% to 66% for all inbound messages, while maintaining an overall CSAT score of 4.65/5.

The Influx AI agent management team performed the following:

Daily (human) AI review

Daily automated AI review

Weekly testing of new questions

Weekly testing of new context, created by AI manager

Weekly updates to context & AI prompts, by AI manager

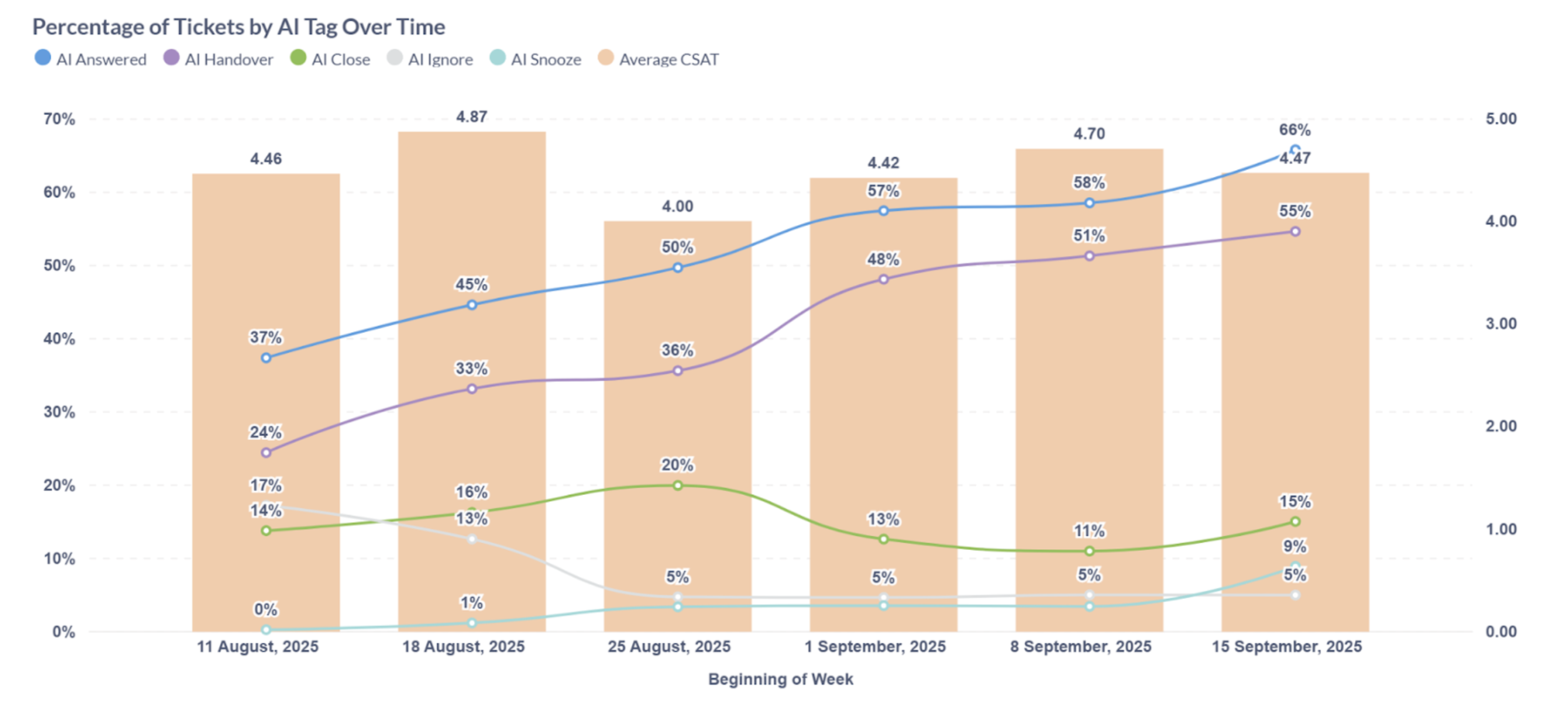

Performance overview

This data shows a managed AI agent handling a steadily growing share of customer interactions while maintaining a high standard of quality. It demonstrates a mature system that's being steadily expanded without a significant drop in customer satisfaction.

The data confirms the core philosophy that the AI's role is to act as a strategic collaborator, not a replacement for human agents.

Key performance insights

The graph's trends offer specific insights into the AI agent's effectiveness and its collaborative role:

Growing AI Engagement: The percentage of tickets handled by the AI, the ai_answered rate, shows a significant increase, rising from 37% to 66% over the period. This indicates that the AI's scope is being broadened to engage with a progressively larger share of customer interactions.

Consistent Customer Satisfaction: Despite the expanding role of the AI, the Average CSAT remains stable and high, consistently in the 4.6 to 4.7 range. This is the most important insight, as it confirms that the increase in AI-handled tickets is not compromising the quality of the customer experience. The system is proving its ability to scale without sacrificing service quality.

Strategic Handoffs: The ai_handover rate fluctuates but remains a key part of the workflow. It rises to a high of 72.3% and then drops to 65.5% before increasing again, but it consistently represents a substantial portion of all tickets. This shows that the AI is not designed to unilaterally close tickets but to triage and prepare them for human agents, a crucial aspect of the human-in-the-loop model. The AI focuses on routing tickets to the right agent with the necessary context, ensuring a smooth and efficient transition.

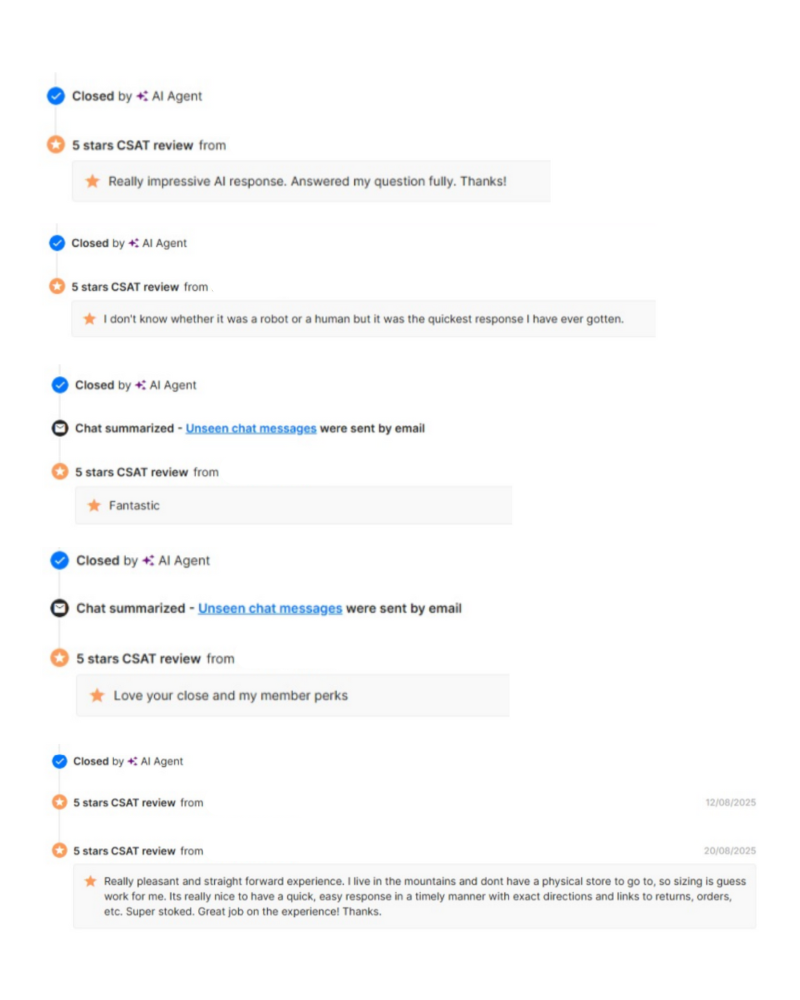

Customer testimonials

Example #2 - Leading supplements brand improves both CSAT and AI agent share of resolutions

This leading supplement brand improved AI agent resolution rate from 28% to 37%, while lifting combined CSAT from a low of 4.07/5 to 4.70/5. This shows a successful shift from human-first to AI-first support, followed by a steady lift in CSAT that makes for a more efficient CX system.

The Influx AI agent management team performed the following:

Daily (human) AI review

Daily automated AI review

Weekly testing of new questions

Weekly testing of new context, created by AI manager

Weekly updates to context & AI prompts, by AI manager

Performance overview

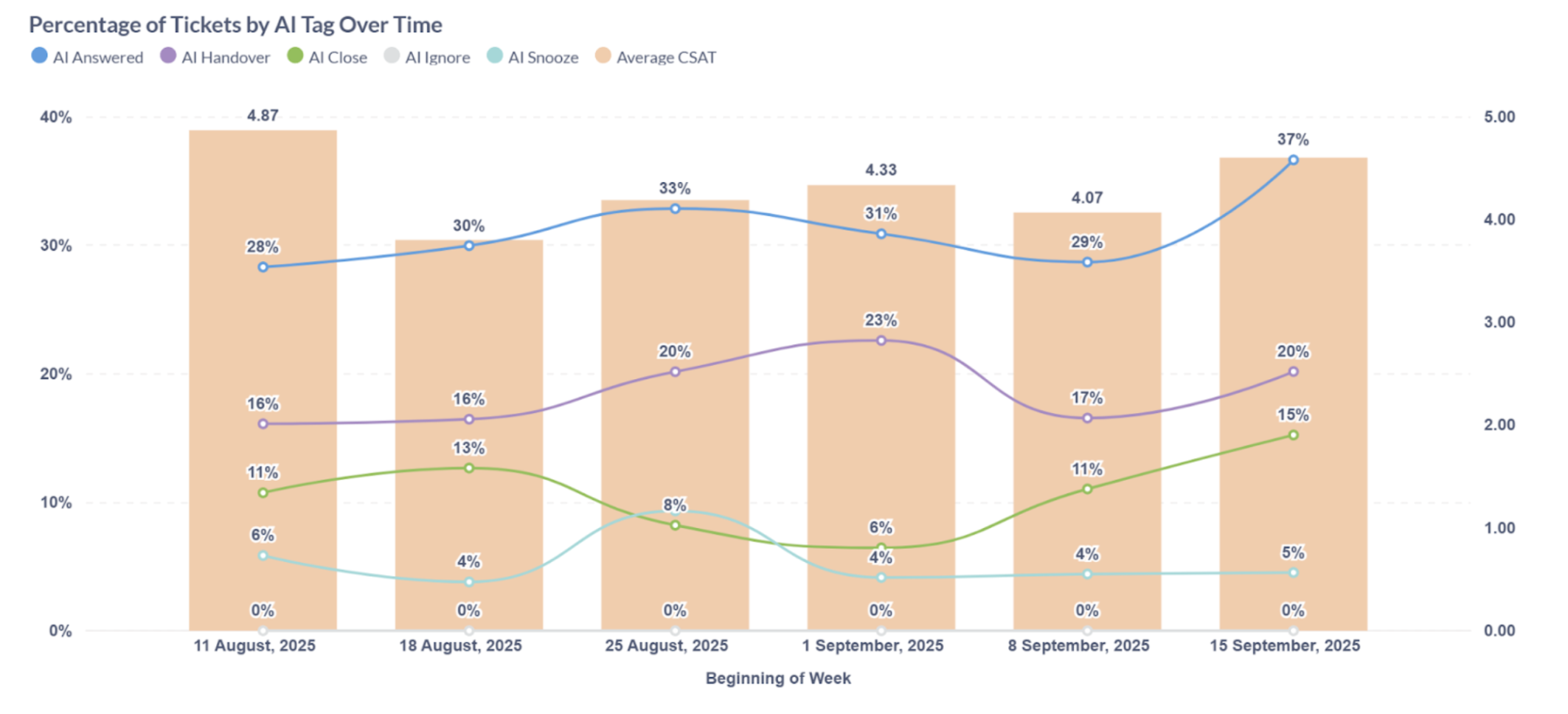

The data demonstrates the successful implementation of Influx's "Continuous AI-Human Improvement Loop." It shows that an initial dip in performance, likely caused by expanding the AI's scope, was successfully addressed through a managed, iterative process.

The data confirms that the AI learned to be more discerning, focusing on strategic information gathering and intelligent handovers, which ultimately led to a significant recovery in customer satisfaction. The low closure rate reinforces the core philosophy that this is a system built for powerful AI-human collaboration, not just for simple ticket automation.

Overall performance and the CSAT trend

The graph tracks the AI agent's performance over a six-week period, and the most crucial metric is the Average CSAT. Its trend tells the central story of the AI's evolution. The CSAT starts at a high of 4.87, dips significantly to a low of 4.07, and then recovers to 4.70. This dip and recovery is a textbook example of the "Continuous AI-Human Improvement Loop" in action.

Initial Drop: The sharp decline in CSAT from August 18 to September 1 coincides with an increase in the AI Answered percentage, which rises from 30% to 33% (3 percentage points, or +10% in relative terms). This suggests that as the AI's scope was expanded to engage with a broader range of customer queries, there was an initial period where its responses were not yet fully optimized, leading to a temporary decrease in customer satisfaction.

Partial Recovery: The subsequent strong recovery of the CSAT score (from 4.07 to 4.70, still short of the starting 4.87) indicates that the refinement process worked. The AI was successfully optimized to become more helpful, thereby winning back customer trust and satisfaction.
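To put a number on how much of the dip was regained, here is a minimal Python sketch using only the three CSAT values quoted above (4.87, 4.07, 4.70). The function name `recovery_fraction` is a hypothetical helper for illustration, not part of any Influx tooling.

```python
def recovery_fraction(start: float, low: float, end: float) -> float:
    """Share of the drop (start - low) that was regained by the end value."""
    drop = start - low
    if drop <= 0:
        raise ValueError("low must be below start for a dip to exist")
    return (end - low) / drop


# CSAT values quoted in this article (start, lowest point, final reading).
csat_start, csat_low, csat_end = 4.87, 4.07, 4.70

drop = csat_start - csat_low      # size of the dip, in CSAT points
regained = csat_end - csat_low    # points won back after the dip
fraction = recovery_fraction(csat_start, csat_low, csat_end)

print(f"Drop: {drop:.2f} points")
print(f"Regained: {regained:.2f} points")
print(f"Recovered: {fraction:.0%} of the drop")
```

On these figures, most but not all of the drop is won back, which is why the recovery is described as partial.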

The AI's evolving behavior: A deeper look at the metrics

The other metrics provide the "how" behind the CSAT trend, illustrating exactly how the AI's behavior changed over time.

AI Answered: The percentage of tickets where the AI sent a response shows a steady increase, rising from 28% to 37% (+32%). This confirms that the AI is being deployed to engage with a progressively larger share of customer interactions, rather than immediately routing them to human agents.

AI Snooze: This metric, defined as the AI actively gathering information for excellent support, is a key indicator of the system's maturity. The AI Snooze percentage rises significantly, from 6% to 15% (+150%). This is a critical insight, showing that the AI is evolving from giving incomplete or potentially unhelpful responses to focusing on gathering the right information for a better outcome. The increase in this metric is directly linked to the recovery of the CSAT score.

AI Handover: The percentage of tickets strategically transferred to human agents shows a general increase from 16% to 20% (+25%). When viewed alongside the rising CSAT, this suggests the handovers are becoming more effective. The AI is learning when its expertise is not sufficient and is setting up human agents for success by providing them with better-prepared context.

AI Close and AI Ignore: These metrics remain consistently low or at 0% for the entire period. This is not a sign of failure but a clear indication that the system's primary goal is not full automation. The low AI Close rate shows that the focus is on a strategic, collaborative model rather than simply closing tickets without human intervention. The 0% AI Ignore rate suggests that there are either no business policies yet requiring an immediate human bypass or that the AI is being trained to handle all initial customer queries.
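The percentages in parentheses above are relative changes, not percentage-point changes. A short Python sketch makes the distinction explicit, using only the before and after values quoted in this article; the `relative_change` helper and the `metrics` dictionary are illustrative names, not part of any reporting tool.

```python
def relative_change(before: float, after: float) -> float:
    """Change expressed as a fraction of the starting value."""
    if before == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (after - before) / before


# (before %, after %) pairs as quoted in the article.
metrics = {
    "AI Answered": (28, 37),
    "AI Snooze": (6, 15),
    "AI Handover": (16, 20),
}

for name, (before, after) in metrics.items():
    points = after - before                 # percentage-point change
    rel = relative_change(before, after)    # relative change
    print(f"{name}: {before}% -> {after}% = +{points} points, {rel:+.0%} relative")
```

AI Snooze and AI Answered both move by 9 percentage points, yet the relative changes differ sharply because the baselines differ.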

In conclusion

AI agent management improves or maintains CSAT while increasing the share of volume handled by an AI agent. When managed to a high standard, with consistent monitoring, testing and iterative launches, AI agents turn a pretty good support function into an exceptional one.
